Building a Serverless Post Scheduler For Static Websites

Good news! I’m going on my first vacation since before the pandemic.

Prior to the pandemic, my wife and I would travel every couple of months to somewhere new. It was great. But the pandemic happened, we had our second kid, and life just kinda… changed.

My youngest daughter is almost 3 years old and has never been on a vacation. It’s time we changed it.

However, during this vacation dry spell, I’ve built up a significant “side hustle” that hopefully many of you know and love. Of course, I’m talking about Ready, Set, Cloud! I send out a weekly newsletter, publish a biweekly podcast, and write a blog post every week (right now I’m on a 64-week streak!).

Ideally, I’d like to take a vacation and not focus on content delivery. Also ideally, I’d like to continue delivering high-quality content uninterrupted to my dedicated followers.

In a normal situation, I’d throw in the towel and simply go on vacation. But I’m a stubborn guy. I am at least going to try to figure something out. I’ve been on an automation kick lately, so why not take advantage of what I’ve laid out already?

So I did. And I’ve made things better again. I built a serverless content scheduler for Ready, Set, Cloud to keep delivering to you even when I’m not here.

Greetings from the past!

The Problem With Static Site Generators

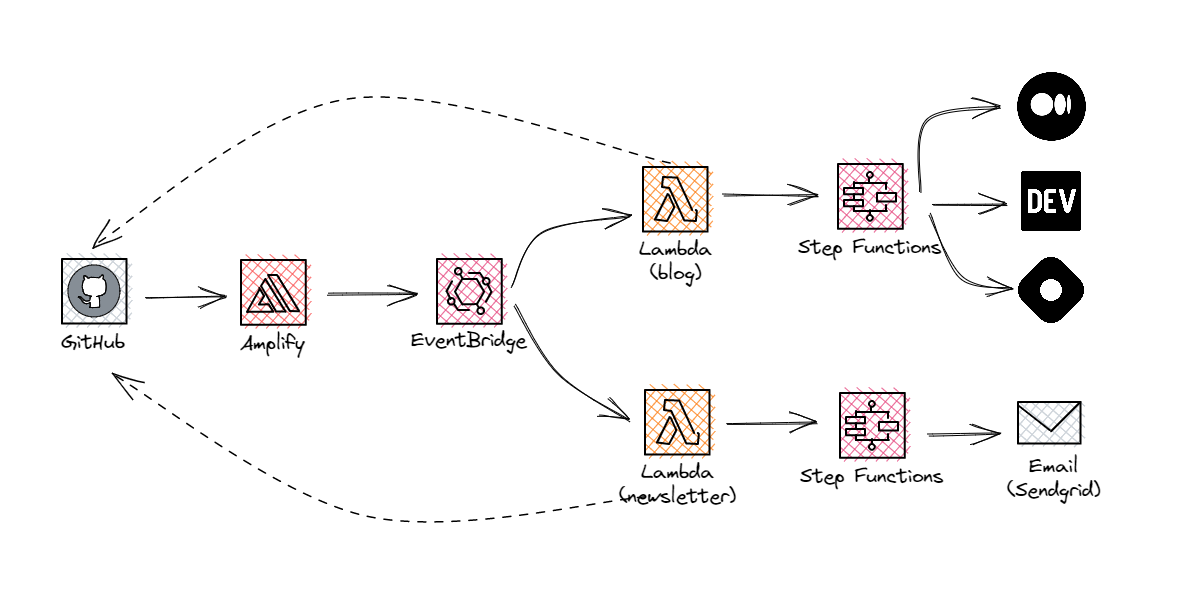

My blog is a static site hosted in AWS Amplify. There’s no dynamic content on it at all. I push Markdown files to the main branch of my site repo in GitHub and Amplify builds it with Hugo. Hugo renders the Markdown as HTML and drops it in an S3 bucket behind a CloudFront distribution.

When I publish content dated in the future, Hugo ignores it and the content does not make it to production. This makes sense because it’s dated for the future. But this leaves me with a problem.

If I go on vacation, I can’t just write my future articles, push to main, and be done. Unfortunately, there is no magic with static site generators that will automatically render future content once the date has passed. So I had to build something.

You’d think the solution is as easy as triggering a build on the date of the future content, right? Sigh

This is where something cool I did turned out to burn me a little bit.

Late in 2022, I built a cross-posting automation that takes my content, transforms it, and republishes on Medium, Dev.to, and Hashnode. Shortly after, I leveraged that work to automate sending the Serverless Picks of the Week newsletter.

For these automations to work, new files must be added in GitHub within a few minutes of the Amplify build.

If I scheduled a build to run in the future, my Lambda functions would find nothing to publish (because there are no newly added files) and my cross-posting and newsletter sending wouldn’t work.

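    // List the SHAs of recent commits that added new content.
    // Assumes `octokit` is an authenticated Octokit client created elsewhere in this module.
    const getRecentCommits = async () => {
      // Only look back a few minutes so each build picks up just the newest commits.
      const timeTolerance = Number(process.env.COMMIT_TIME_TOLERANCE_MINUTES);
      const date = new Date();
      date.setMinutes(date.getMinutes() - timeTolerance);

      const result = await octokit.rest.repos.listCommits({
        owner: process.env.OWNER,
        repo: process.env.REPO,
        path: process.env.PATH,
        since: date.toISOString()
      });

      // Keep only commits whose message starts with the new-content prefix.
      const newPostCommits = result.data.filter(c => c.commit.message.toLowerCase().startsWith(process.env.NEW_CONTENT_INDICATOR));

      return newPostCommits.map(d => d.sha);
    };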

The code above shows how I use GitHub’s SDK, octokit, to list commits in my repository in the past few minutes. Full source code here.

With this simple trigger not a viable option, I had to build a slightly more involved solution.

The Fancy New Solution

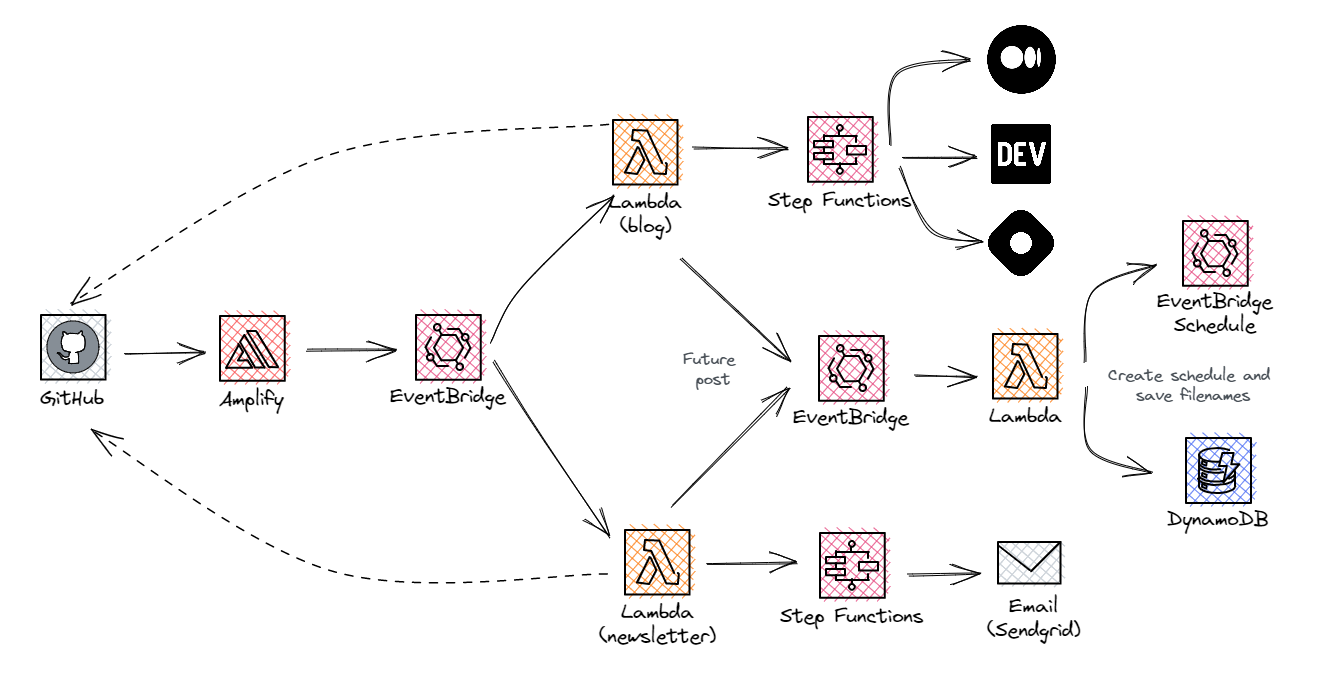

I’ve been wanting to try out the EventBridge scheduler since it was released in November of 2022. It feels like a powerful capability, but until recently I hadn’t found a real-world use case to give it a try. Now that I finally have a reason to build one-time scheduled events, it was time to get my hands dirty.

The update to my architecture involves a decent amount of new code but also required some modifications to the existing code. I had to make a change to the Lambda function that identifies new content and triggers the Step Functions workflows. Instead of finding new files and triggering a state machine, it now needed to find the new files, check if the date is scheduled for the future, and trigger different events accordingly.

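    const processNewContent = async (newContent) => {
      const today = new Date();
      // allSettled lets every file be handled even if one of them fails.
      const executions = await Promise.allSettled(newContent.map(async (content) => {
        // Read the publish date from the Markdown front matter.
        const metadata = frontmatter(content.content);
        const date = new Date(metadata.data.date);
        if (date > today) {
          // Future-dated: create a schedule instead of publishing now.
          await scheduleFuturePost(content, date);
        } else {
          await processContentNow(content);
        }
      }));
    };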

The processContentNow function is the existing behavior. It triggers a state machine that will either cross-post my article or publish a newsletter, depending on what was pushed to the main branch. However, the scheduleFuturePost function is new. It fires an EventBridge event to create an EventBridge schedule that will trigger a build of my site.

When a future post is identified, a Lambda function will create a one-time EventBridge schedule and save the new content filenames along with the schedule name to DynamoDB.
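To make that step a little more concrete, here is a minimal sketch of how the schedule request and the DynamoDB record could be assembled. It is not the actual Ready, Set, Cloud code. The helper names, the `post-` name prefix, the `fileName`, `commit`, and `type` properties on `content`, and the ARNs passed in are all assumptions for illustration. The only parts taken as given are the shape of an EventBridge Scheduler request and the one-time `at(...)` expression format.

```javascript
// Hypothetical helpers: names and inputs are illustrative, not the real implementation.

// One-time schedules use the expression form at(yyyy-mm-ddThh:mm:ss).
const toAtExpression = (date) => `at(${date.toISOString().slice(0, 19)})`;

const buildScheduledPost = (content, date, { targetArn, roleArn }) => {
  // Schedule names allow letters, digits, '-', '_' and '.', up to 64 characters.
  const scheduleName = `post-${content.fileName}`
    .replace(/[^0-9a-zA-Z_.-]/g, '-')
    .slice(0, 64);

  return {
    // Input for an EventBridge Scheduler CreateSchedule call.
    schedule: {
      Name: scheduleName,
      ScheduleExpression: toAtExpression(date),
      ScheduleExpressionTimezone: 'UTC',
      FlexibleTimeWindow: { Mode: 'OFF' },
      Target: {
        Arn: targetArn,   // assumed: the Lambda function that starts the Amplify build
        RoleArn: roleArn, // assumed: a role the scheduler can use to invoke the target
        Input: JSON.stringify({ scheduleName })
      }
    },
    // Record to save so the content can be found again after the build.
    item: {
      pk: 'scheduled-post',
      sk: date.toISOString(),
      fileName: content.fileName,
      commit: content.commit,
      type: content.type,
      scheduleName
    }
  };
};
```

In a Lambda function, the `schedule` object could be passed to `CreateScheduleCommand` from `@aws-sdk/client-scheduler` and the `item` to a DynamoDB put. Keeping the construction in a pure function makes it easy to test without touching AWS.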

While this is cool, it’s only half the solution. We were able to get a schedule created and save the files to be cross-posted or sent in email, but we still needed a hook to process them. Like I said before, if we simply retrigger the Amplify build, none of the downstream logic would run because the Lambda function looks for recently changed files in GitHub. So I had to trigger another workflow off the Successful Build EventBridge event that Amplify publishes.

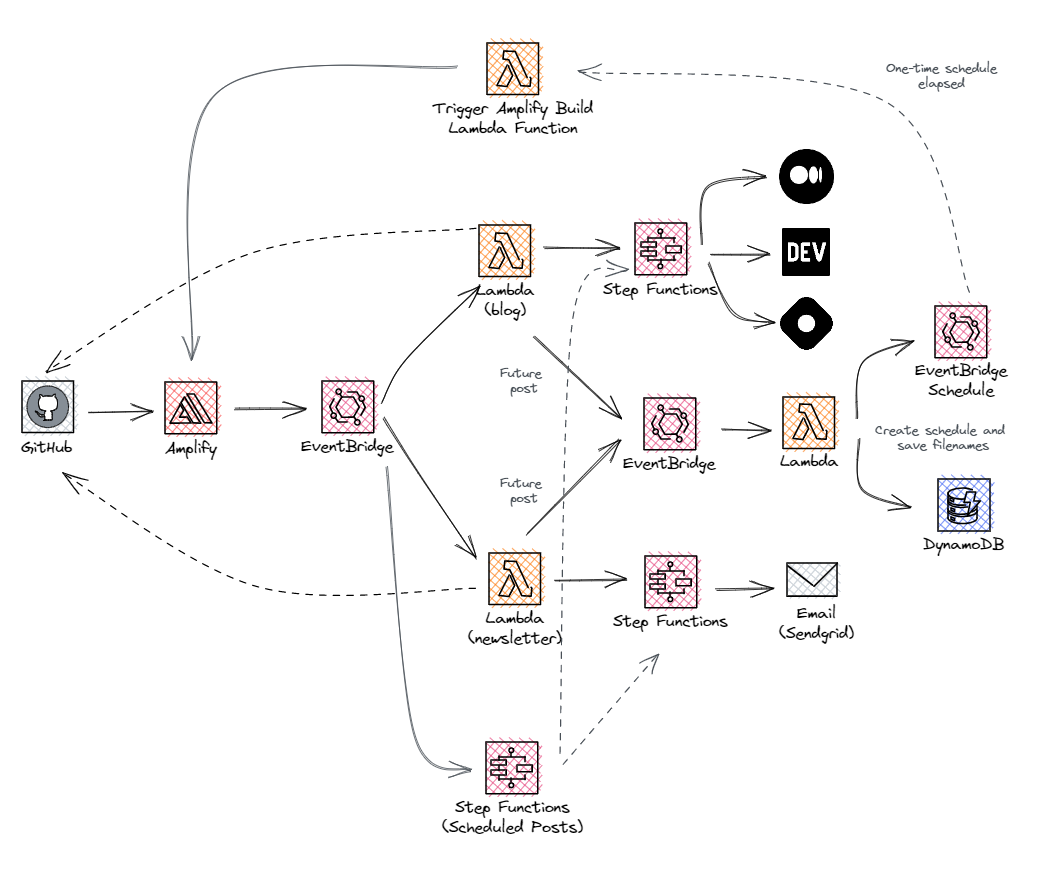

This is where the architecture diagram starts looking a little crazy. We have the one-time EventBridge schedule that triggers a Lambda function that starts an Amplify build. When the build completes, it fires off an EventBridge event indicating the build was successful. I added a new subscriber to that event to process all scheduled posts. The new subscriber kicks off a Step Functions workflow to process the files that were saved in DynamoDB.
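As a rough sketch of the two pieces in that chain, the snippet below builds the input for an Amplify `StartJob` call and an EventBridge pattern that matches a successful build. The function names, the job reason text, and the app ID and branch you pass in are assumptions for illustration, not values from my setup.

```javascript
// Hypothetical helpers: the app ID and branch name are supplied by the caller.

// Input for the Amplify StartJob API. RELEASE asks Amplify to run a fresh build of the branch.
const buildStartJobInput = (appId, branchName) => ({
  appId,
  branchName,
  jobType: 'RELEASE',
  jobReason: 'Scheduled post'
});

// EventBridge rule pattern that matches a successful Amplify build for one branch.
const buildSucceededPattern = (appId, branchName) => ({
  source: ['aws.amplify'],
  'detail-type': ['Amplify Deployment Status Change'],
  detail: {
    appId: [appId],
    branchName: [branchName],
    jobStatus: ['SUCCEED']
  }
});
```

The first object could be handed to `StartJobCommand` from `@aws-sdk/client-amplify` inside the Lambda function the schedule invokes. The second is the kind of pattern the new subscriber rule would use.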

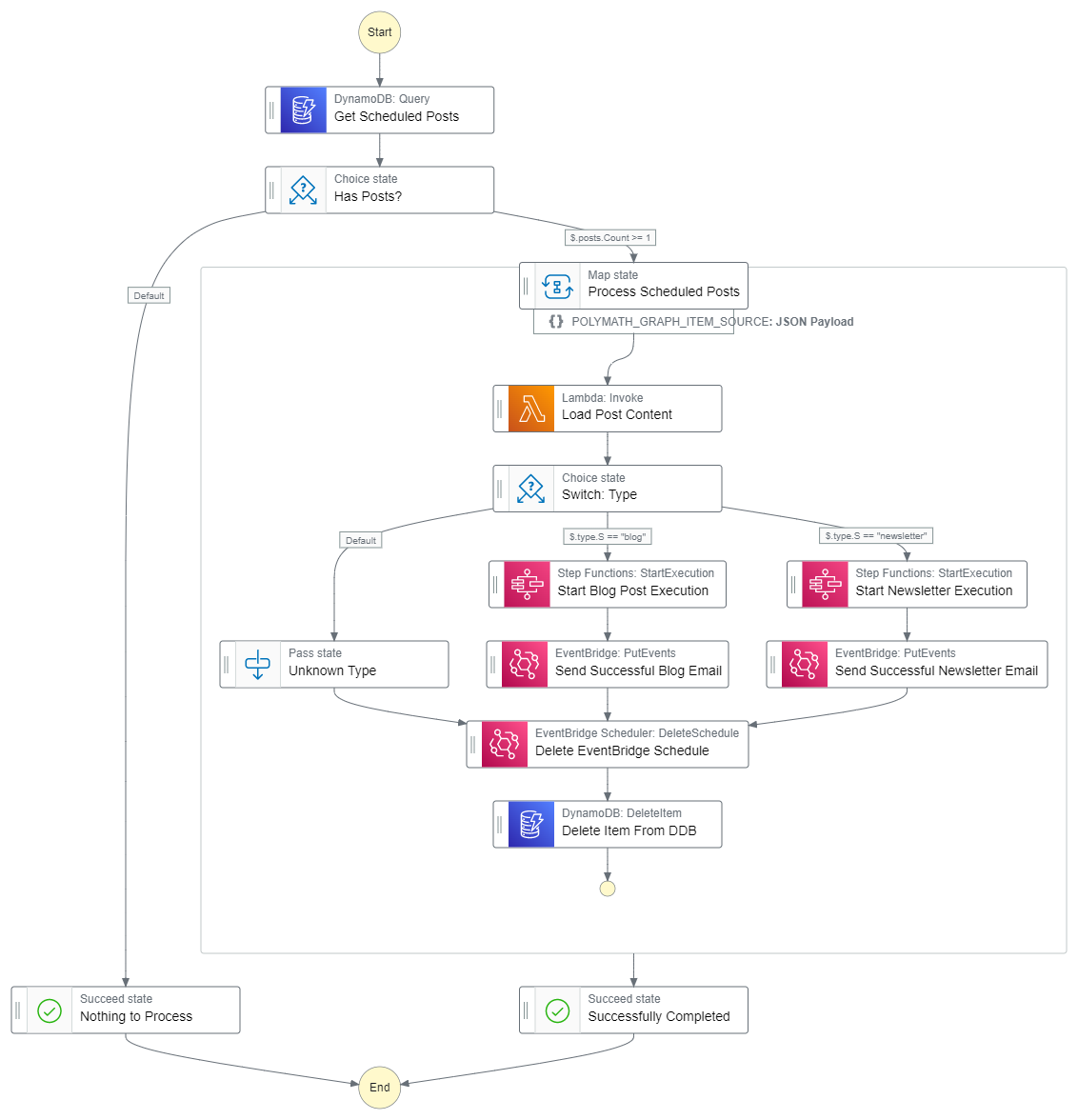

The state machine acts more or less like a switch statement.

Walking through the above workflow, this state machine will…

- Load scheduled posts out of DynamoDB. Each record holds the name of the file containing the content to be published.

- Determine what kind of post it is (set in the DynamoDB record)

- Trigger the appropriate state machine

- Send me an email notifying me the scheduled post worked

- Delete the one-time EventBridge schedule that triggered the build

- Delete the record for the scheduled post from DynamoDB
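The steps above can be read as a switch over the record's type. The sketch below is only an illustration of that flow in JavaScript, not the state machine definition itself. It returns the ordered list of actions for one record instead of calling AWS, and the action and workflow names are made up for the example.

```javascript
// Hypothetical planner: describes what the workflow does for one scheduled-post record.
const planScheduledPost = (record) => {
  let workflow;
  switch (record.type) {
    case 'blog':
      workflow = 'cross-post';
      break;
    case 'newsletter':
      workflow = 'send-newsletter';
      break;
    default:
      throw new Error(`Unknown post type: ${record.type}`);
  }

  // The order matters: clean up only after the content has been processed.
  return [
    { action: 'start-workflow', workflow, fileName: record.fileName, commit: record.commit },
    { action: 'notify', message: `Scheduled ${record.type} published: ${record.fileName}` },
    { action: 'delete-schedule', scheduleName: record.scheduleName },
    { action: 'delete-record', key: { pk: record.pk, sk: record.sk } }
  ];
};
```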

It’s important to note that EventBridge schedules do not delete automatically after they are run. With this in mind, I included the deletion in the state machine that is triggered by the schedule. The last thing we want is hundreds (or thousands) of expired schedules hanging around. Looking at you, Luc!

Data Model

The data stored for a scheduled post is simple and accessed once before it is deleted. The only access pattern to retrieve the data is “get all scheduled posts before a specific day”, so I structured the model like this:

    {
      "pk": "scheduled-post",
      "sk": "{ISO 8601 date when the post should be published}",
      "fileName": "{path to the content in my GitHub repo}",
      "commit": "{commit sha when the file was added}",
      "type": "{blog or newsletter}",
      "scheduleName": "{name of the eventbridge schedule that triggers the Amplify build}"
    }

Since data is accessed so infrequently and I’ll never have more than a couple of posts scheduled at one time, it’s ok to have the static scheduled-post value for the pk. I wouldn’t run into hot partition issues unless I crossed 3000 RCUs or 1000 WCUs per second, which is not going to happen at this scale.

To query for the posts scheduled today or earlier, I can run a DynamoDB query in my state machine like this:

    "TableName": "${TableName}",
    "KeyConditionExpression": "#pk = :pk and #sk <= :sk",
    "ExpressionAttributeNames": {
      "#pk": "pk",
      "#sk": "sk"
    },
    "ExpressionAttributeValues": {
      ":pk": {
        "S": "scheduled-post"
      },
      ":sk": {
        "S.$": "$$.Execution.StartTime"
      }
    }

Notice in the KeyConditionExpression that I am using #sk <= :sk. Since the sk is an ISO 8601 formatted date, and ISO 8601 strings in the same format and time zone sort chronologically, the less than or equal to operator will only pull back the records dated at or before the execution time of the workflow.
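If the string comparison feels a little too magical, here is a small sketch that mimics the same check in plain JavaScript. The records and the execution time are made-up values, and it assumes every timestamp uses the same UTC format with milliseconds, which is what both `toISOString()` and the Step Functions execution start time produce.

```javascript
// ISO 8601 timestamps in one fixed format sort the same way as strings and as dates,
// so <= on the strings behaves like "on or before".
const isDue = (sk, executionStartTime) => sk <= executionStartTime;

// Hypothetical records, for illustration only.
const records = [
  { sk: '2023-06-30T09:00:00.000Z', fileName: 'a.md' },
  { sk: '2023-07-01T12:00:00.000Z', fileName: 'b.md' },
  { sk: '2023-07-08T09:00:00.000Z', fileName: 'c.md' }
];

const executionStartTime = '2023-07-01T12:00:00.000Z';
const due = records
  .filter(r => isDue(r.sk, executionStartTime))
  .map(r => r.fileName);

console.log(due); // [ 'a.md', 'b.md' ]
```

The comparison stops being reliable if the formats are mixed, for example a date-only value next to a full timestamp, or offsets other than UTC.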

Simple enough!

Summary

This was a fun addition to the ever-growing backend of Ready, Set, Cloud. It introduced me to EventBridge schedules, taught me how to programmatically trigger Amplify builds, and had me critically thinking about how best to trigger downstream workflows.

More importantly, it enables me to provide you with uninterrupted content when I’m on vacation. Win-win! This post was the first trial run of the functionality, so if you’re reading this now… it worked!

Building in public has been a game-changer for me. It’s enabled me to build things that are particularly useful to my audience, pushed my knowledge to new lengths, and helped keep me up to date with important new features from AWS. Most importantly, it helps keep coding fresh. Staying in practice and having fun with it makes me excited to sit down at my computer every day.

If you don’t already share what you’re working on with the community, there’s never been a better time. Throw your ideas out there, get some in return, and build your skills. It’s been the best decision of my career.

Happy coding!
